If you were born in the 1960s or earlier and you watched any good sci-fi, you probably feel a bit robbed. There are no moon colonies, no hoverboards, and no flying cars. If you were born later, you’re probably pretty happy because your dream of disappearing into your smartphone is nearly here. With that said, we are chipping away at the Turing dream of artificial general intelligence (AGI).

What is AGI? AGI is essentially the next step after artificial intelligence.

AI lets a relatively dumb computer do what a person would do using a large amount of data. Tasks like classification, clustering, and recommendations are done algorithmically. No one paying close attention should be fooled into thinking that AI is more than a bit of math.
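To make the “bit of math” point concrete, here is a minimal sketch of a nearest-centroid classifier in plain Python. The animal measurements and the names `training`, `centroid`, and `classify` are made up for illustration; real systems use far more data and more elaborate models, but the principle is similar: average some numbers, then measure distances.

```python
from math import dist  # Euclidean distance, available in Python 3.8+

# Hypothetical toy data: (height_cm, weight_kg) samples for each label.
training = {
    "cat": [(25.0, 4.0), (23.0, 3.5), (27.0, 5.0)],
    "dog": [(60.0, 30.0), (55.0, 25.0), (65.0, 35.0)],
}

def centroid(points):
    """Average each coordinate across a list of points."""
    n = len(points)
    return tuple(sum(coord) / n for coord in zip(*points))

# "Training" here is nothing more than computing one average point per label.
centroids = {label: centroid(points) for label, points in training.items()}

def classify(sample):
    """Return the label whose centroid is closest to the sample."""
    return min(centroids, key=lambda label: dist(sample, centroids[label]))

print(classify((30.0, 6.0)))   # a small animal lands nearer the cat average
print(classify((58.0, 28.0)))  # a large one lands nearer the dog average
```

Nothing in this sketch understands what a cat is. It only knows which pile of numbers a new pair of numbers sits closest to.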

AGI is where the computer can “generally” perform any intellectual task a person can and even communicate in natural language the way a person can.

This idea isn’t new. While the term “AGI” harkens back to 1987, the original vision for AI was basically what is now AGI. Early researchers thought that AGI (then AI) was closer to becoming reality than it actually was. In the 1960s, they thought it was 20 years away. So Arthur C. Clarke was being conservative with the timeline for 2001: A Space Odyssey.

A key problem was that those early researchers started at the top and went down. That isn’t actually how our brain works, and it isn’t the methodology that will teach a computer how to “think.” In essence, if you start with implementing reason and work your way down to instinct, you don’t get a “mind.” Today, researchers mostly start from the bottom up.

There are still obstacles to overcome before we achieve AGI. A key challenge is intuition. Machine learning and deep learning both require large amounts of data for tasks that you can probably do with far less, partly because you can kind of “see it.” Your brain makes intuitive leaps. While this intuitive leaping is a sort of guess and check, it isn’t quite the same as the machine equivalent, simulated annealing, which is often derided as “boiling the ocean.”

Compare machine learning or deep learning to how a human brain skips some of the training set and makes a leap of intuition that lets you perform a task without having done it on 10,000 rows of data. In fact, doing it on 10,000 rows of data isn’t very helpful to an organic computer (that is, a brain); your dendrites don’t grow that fast, and your brain is actually programmed to forget minutiae.
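For readers who haven’t seen simulated annealing, here is a minimal sketch of the machine version of guess and check, assuming a one-dimensional cost function. The `bumpy` function, the step size, and the cooling schedule are all illustrative choices, not a recommended configuration.

```python
import math
import random

def simulated_annealing(cost, start, steps=10_000, temp=1.0, cooling=0.999, seed=0):
    """Minimize `cost` by random guessing, tolerating bad guesses early on."""
    rng = random.Random(seed)
    current = best = start
    for _ in range(steps):
        # Guess: take a small random step away from the current position.
        candidate = current + rng.uniform(-1.0, 1.0)
        delta = cost(candidate) - cost(current)
        # Check: always accept an improvement; accept a worse guess with a
        # probability that shrinks as the temperature cools.
        if delta < 0 or rng.random() < math.exp(-delta / temp):
            current = candidate
            if cost(current) < cost(best):
                best = current
        temp *= cooling
    return best

def bumpy(x):
    """Hypothetical cost function: a bowl with ripples that create local minima."""
    return (x - 2.0) ** 2 + 3.0 * math.sin(5.0 * x)

best = simulated_annealing(bumpy, start=-8.0)
print(best, bumpy(best))
```

Note the thousands of evaluations spent on a single number. That brute persistence is what earns the “boiling the ocean” label, and it is a long way from an intuitive leap.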

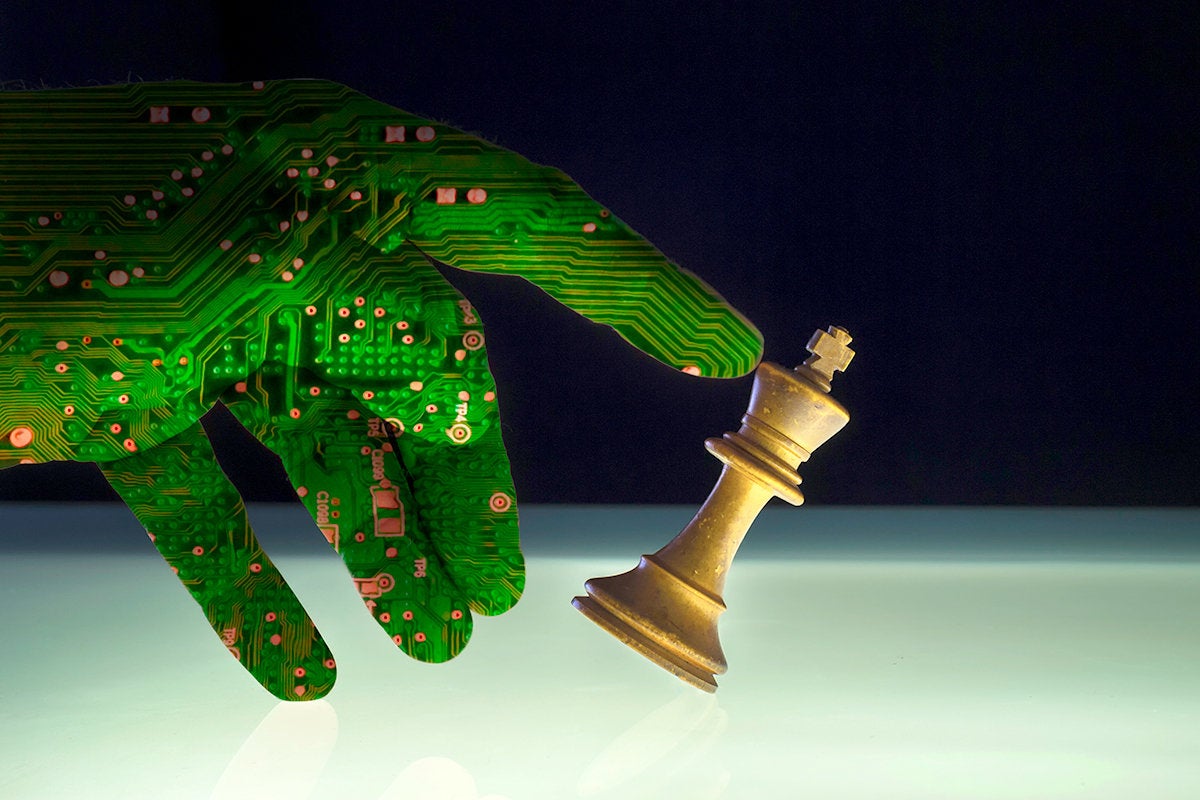

If AGI solves the intuition challenge, computers will be able to do the same thing, skipping the minutiae. That is how Google’s AlphaZero beat Stockfish, the reigning computer chess champion.

Another key challenge is unsupervised learning. As one researcher put it, “Computers need to be more like babies.” Currently, most machine learning is based on spoon-feeding data and tasks to a specific algorithm. That isn’t how people learn. A baby starts by putting everything in its mouth, seeing what things feel like and what happens when it does something. Computers don’t do that at all.

Another issue is that computers aren’t very creative. Have you heard IBM’s Watson try to tell a joke? At best, it pulls one off the internet, and what it finds isn’t very funny. (Then again, Chris Rock’s latest Netflix special is only half funny, and the rest is just a little sad.)

Meanwhile, a lot of what is going on with deep learning and machine learning is around what I call the mechanics of being: “What is that thing over there?” Facebook has been open-sourcing some interesting software in this area. Before computers can be more like babies, they have to recognize a bottle when they see one. If you’ve used social media, you know that tremendous progress has been made in this area.

Supposedly, this is all headed towards “The Singularity” or maybe Skynet. The utopian idea is that this superintelligence will elevate all of society. There are different views of what that elevation is, of course. Some people want to upload your brain into a computer to achieve a kind of immortality. Others want computing and people to intermix and become less distinguishable from each other. You can glimpse that today with how people regard their phones and how they feel smarter with them because they can Google the things they don’t know.

So, as a developer, where do you get started? Like a baby, pick something that interests you and play with it. Maybe you want to play with Facebook’s Detectron and detect something. Maybe you want to analyze how you’re using your phone or laptop and program it to recommend that you do something else. There are wonderful resources for all of this on Udemy, edX, and Coursera. I also recommend following some of the researchers, like Carlos Perez, on Medium. Will we reach The Singularity? Beats me, but the revolution in AI is certainly well under way.